About AEStream

AEStream aims to simplify access to event-based neuromorphic technologies, motivated by two problems:

the overhead of interacting with neuromorphic technologies (event cameras, neuromorphic chips, machine learning models, etc.) and

incompatibilities between neuromorphic systems (e.g. streaming from cameras to SpiNNaker).

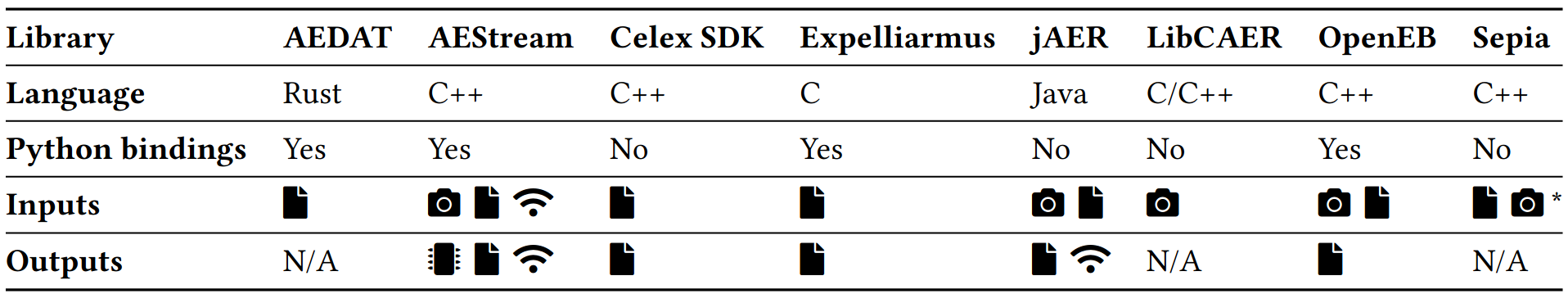

The biggest innovation in AEStream is to converge on a single, unifying data structure: the address-event representation (AER). Because every input and output operates on the same notion of an event, any input can be composed with any output. Adding a new peripheral then only requires translating to or from AER.
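The composition idea can be illustrated with a minimal Python sketch. It is not AEStream's actual API: the `Event` fields (timestamp, x, y, polarity), the microsecond unit, and the functions `synthetic_camera`, `from_tuples`, `to_frame`, and `count_events` are all hypothetical names chosen for illustration. The only point is that inputs produce events and outputs consume events, so any pairing works.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List, Tuple


@dataclass(frozen=True)
class Event:
    """Hypothetical address-event: when and where a change occurred."""
    timestamp: int  # assumed to be microseconds
    x: int
    y: int
    polarity: bool  # True for an "on" event, False for an "off" event


# --- Inputs: anything that can be translated INTO a stream of events ---

def synthetic_camera(n: int) -> Iterator[Event]:
    """Stand-in for a camera: yields n events along the diagonal."""
    for i in range(n):
        yield Event(timestamp=i * 100, x=i, y=i, polarity=(i % 2 == 0))


def from_tuples(rows: Iterable[Tuple[int, int, int, bool]]) -> Iterator[Event]:
    """Stand-in for a file reader: translates raw tuples to events."""
    for timestamp, x, y, polarity in rows:
        yield Event(timestamp, x, y, polarity)


# --- Outputs: anything that can be fed FROM a stream of events ---

def to_frame(events: Iterable[Event], width: int, height: int) -> List[List[int]]:
    """Accumulates events into a 2D grid (+1 for on, -1 for off)."""
    frame = [[0] * width for _ in range(height)]
    for event in events:
        frame[event.y][event.x] += 1 if event.polarity else -1
    return frame


def count_events(events: Iterable[Event]) -> int:
    """Trivial sink that only counts the events it receives."""
    return sum(1 for _ in events)


if __name__ == "__main__":
    # Any input composes with any output because both sides speak Event.
    print(to_frame(synthetic_camera(4), width=4, height=4))
    print(count_events(from_tuples([(0, 1, 2, True), (50, 3, 0, False)])))
```

In this sketch, supporting a new device or file format means writing one function that yields or consumes `Event` objects; none of the existing inputs or outputs need to change.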

AEStream

A paper on AEStream was published at the Neuro Inspired Computational Elements Conference in 2023, available as a preprint on arXiv: https://arxiv.org/abs/2212.10719. In a streaming setting, the paper concludes:

> In sum, AEStream reduces copying operations to the GPU by a factor of at least 5 and allows processing at around 30% more frames (65k versus 5k in total) over a period of around 25 seconds compared to conventional synchronous processing.

Developer affiliations and acknowledgements

AEStream is an inter-institutional and international effort, spanning several research institutions and companies. In particular, AEStream has received contributions from

KTH Royal Institute of Technology

University of Heidelberg

University of Groningen

Heriot-Watt University

European Space Agency

The developers would like to thank Anders Bo Sørensen for his friendly and invaluable help with CUDA and GPU profiling. Emil Jansson deserves our gratitude for scrutinizing and improving the coroutine benchmark C++ code.

We gratefully acknowledge funding from the EC Horizon 2020 Framework Programme under Grant Agreements 785907 and 945539 (HBP). Our thanks also extend to the Pioneer Centre for AI, under the Danish National Research Foundation grant number P1, for hosting us.